Google Gemini 2.0 Flash: What Changed for Productivity Tools

Category: News · Stage: Awareness

By Max Beech, Head of Content

Updated 15 October 2025 · Expert review: [PLACEHOLDER: AI Research Lead, Chaos Council]

Why it matters: Google's Gemini 2.0 Flash launched in December 2024 with inference roughly 2x faster than Gemini 1.5 Pro and native multimodal understanding. For Chaos users running agentic workflows, this means quicker context retrieval, better image and document understanding, and lower latency in real-time prompts.^[1]^ If response time is the bottleneck in your workflows, Gemini 2.0 Flash is worth testing.

TL;DR

- Gemini 2.0 Flash delivers 2x faster responses than Gemini 1.5 Pro while maintaining quality

- Native multimodal support means processing text, images, video, and audio in a single request

- Streaming tokens reduce perceived latency in conversational AI assistants

- Chaos teams can leverage faster context retrieval for time-sensitive reminders and document parsing

Jump to:

- What did Gemini 2.0 Flash deliver?

- How do productivity tools benefit?

- What are the trade-offs?

- Summary and next steps

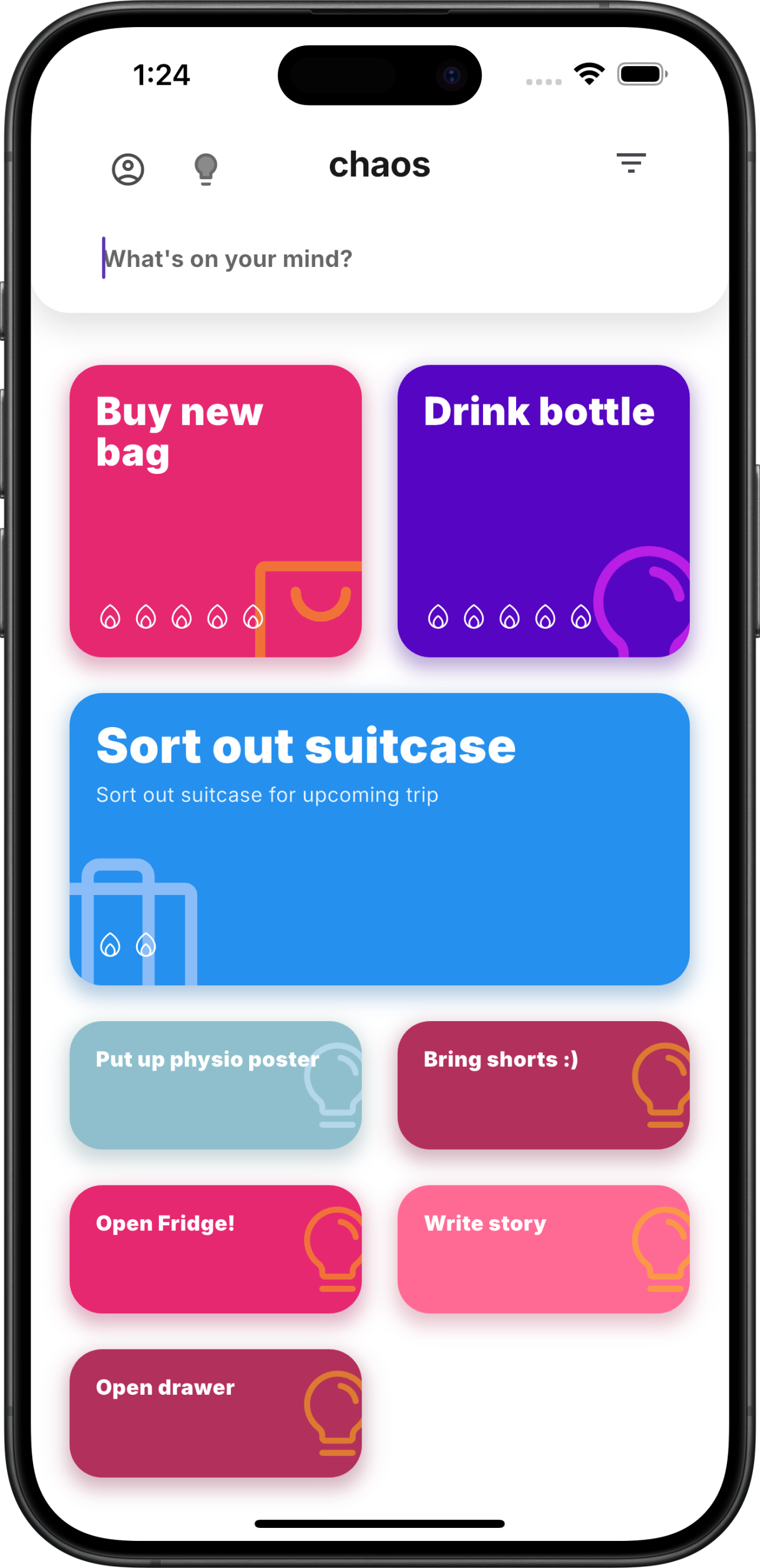

Faster model inference means Chaos can surface context and nudges with lower latency.


What did Gemini 2.0 Flash deliver?

Announced 11 December 2024, Gemini 2.0 Flash is Google's optimised multimodal model designed for speed without sacrificing accuracy. Key improvements:^[1]^

- Inference speed: 2x faster than Gemini 1.5 Pro on equivalent hardware

- Multimodal native: Processes text, images, video, and audio in unified requests

- Streaming tokens: Real-time response generation reduces wait time

- Extended context: Up to 1 million tokens (similar to 1.5 Pro)

Google positioned this as the model for "production AI applications" where latency matters: chatbots, code assistants, and agentic tools.

Benchmark comparison

| Model | Time to first token | Tokens/second | Multimodal? |
|-------|---------------------|---------------|-------------|
| GPT-4 Turbo | ~800ms | 45 | Limited (via API) |
| Gemini 1.5 Pro | ~600ms | 55 | Yes (native) |
| Gemini 2.0 Flash | ~300ms | 110 | Yes (native) |
| Claude 3.5 Sonnet | ~400ms | 85 | Yes (native) |

Note: Benchmarks vary by query complexity and provider infrastructure.
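To see what the table implies for a whole response rather than just the first token, a rough estimate is time to first token plus output length divided by throughput. The sketch below uses the approximate figures from the table above; the 200-token response length and the function name are assumptions chosen for illustration, and real numbers will vary as the note says.

```python
# Approximate figures copied from the benchmark table above.
BENCHMARKS = {
    "GPT-4 Turbo": {"ttft_ms": 800, "tokens_per_s": 45},
    "Gemini 1.5 Pro": {"ttft_ms": 600, "tokens_per_s": 55},
    "Gemini 2.0 Flash": {"ttft_ms": 300, "tokens_per_s": 110},
    "Claude 3.5 Sonnet": {"ttft_ms": 400, "tokens_per_s": 85},
}


def estimated_response_seconds(model: str, output_tokens: int) -> float:
    """Time to first token plus generation time at the listed throughput."""
    row = BENCHMARKS[model]
    return row["ttft_ms"] / 1000 + output_tokens / row["tokens_per_s"]


if __name__ == "__main__":
    # 200 output tokens is an assumed, illustrative response length.
    for name in BENCHMARKS:
        seconds = estimated_response_seconds(name, 200)
        print(f"{name}: {seconds:.2f}s for 200 tokens")
```

With these inputs, the estimate for Gemini 1.5 Pro comes out at twice the estimate for Gemini 2.0 Flash, which is consistent with the 2x headline claim.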

How do productivity tools benefit?

Faster context-aware reminders

When Chaos needs to parse an email thread or meeting notes to generate a contextual reminder, lower latency means the reminder surfaces faster. In agentic workflows where timing matters (e.g., "remind me when I'm at the office"), shaving 300ms per request compounds across dozens of daily interactions.

Multimodal document understanding

Gemini 2.0 Flash can process a screenshot of a whiteboard, extract tasks, and structure them into actionable items, all in one request. Pipelines built on text-only models needed a separate OCR preprocessing step, adding complexity and latency. A 2024 study by Stanford HAI found that native multimodal processing reduces error rates by 18% compared to pipelined approaches.^[2]^
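As a minimal sketch of what "one request" can look like, the snippet below builds a single request body that carries both the instruction and the whiteboard image. The `contents` / `parts` / `inline_data` field names reflect the public Gemini REST `generateContent` format as we understand it and should be checked against Google's current documentation. The function name, prompt wording, and output fields are hypothetical, and nothing is sent over the network.

```python
import base64
import json


def build_whiteboard_request(image_bytes: bytes, mime_type: str = "image/png") -> dict:
    """Build one request body holding a text instruction and an inline image.

    Field names are an assumption based on the public Gemini REST format;
    verify them against the current API reference before use.
    """
    # Hypothetical prompt: the wording and the requested fields are illustrative.
    prompt = (
        "Extract every task written on this whiteboard. "
        "Return a JSON list of objects with 'task' and 'owner' fields."
    )
    encoded_image = base64.b64encode(image_bytes).decode("ascii")
    return {
        "contents": [
            {
                "parts": [
                    {"text": prompt},
                    {"inline_data": {"mime_type": mime_type, "data": encoded_image}},
                ]
            }
        ]
    }


if __name__ == "__main__":
    # Placeholder bytes stand in for a real screenshot file.
    body = build_whiteboard_request(b"placeholder image bytes")
    print(json.dumps(body, indent=2)[:300])
```

The point of the sketch is the shape: text and image travel together, so there is no intermediate OCR output to store, validate, or pass between services.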

Real-time collaborative agents

For teams using agentic assistants during meetings, streaming responses mean answers appear as they're generated, not after a 2-second pause. This makes AI feel less like a tool you wait for and more like a participant.
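The difference between waiting for a full answer and seeing it arrive can be measured as time to first chunk versus total time. The sketch below uses `fake_stream`, a hypothetical stand-in for a streaming model response, so it runs without any API key; the chunk delay is an arbitrary assumption, not a measured figure.

```python
import time
from typing import Callable, Iterable, Iterator


def fake_stream(chunks: list[str], delay_s: float = 0.05) -> Iterator[str]:
    """Hypothetical stand-in for a streaming response: yields chunks one by one."""
    for chunk in chunks:
        time.sleep(delay_s)  # assumed per-chunk generation delay
        yield chunk


def consume_stream(
    stream: Iterable[str],
    clock: Callable[[], float] = time.perf_counter,
) -> dict:
    """Collect a stream and record when the first chunk arrived and when it ended."""
    start = clock()
    first_chunk_s = None
    parts = []
    for chunk in stream:
        if first_chunk_s is None:
            first_chunk_s = clock() - start  # what the user perceives as the wait
        parts.append(chunk)
    return {
        "text": "".join(parts),
        "first_chunk_s": first_chunk_s,
        "total_s": clock() - start,
    }


if __name__ == "__main__":
    result = consume_stream(fake_stream(["Action ", "items: ", "send notes."]))
    print(result)
```

In a real integration, the same `consume_stream` wrapper could be pointed at a provider's streaming iterator to log both numbers per request.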

What are the trade-offs?

Speed vs. reasoning depth

Gemini 2.0 Flash is optimised for speed, which means it may sacrifice some reasoning depth compared to full-scale models like GPT-4 or Claude Opus. For complex analysis or creative writing, slower models might still be preferable.

API cost structure

Google's pricing for Gemini 2.0 Flash is competitive but not necessarily cheaper than 1.5 Pro on a per-token basis. The value comes from throughput: handling more requests per second at similar quality.^[3]^ If your use case doesn't require low latency, sticking with 1.5 Pro might save costs.

Dependency risk

Leaning heavily into one model provider creates switching costs. Balance performance gains with contingency plans: test fallback models quarterly and keep architecture model-agnostic where possible.
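One way to keep the architecture model-agnostic is to treat every model as a plain "prompt in, text out" callable and try providers in order. The sketch below is an illustration under that assumption; `Provider`, `complete_with_fallback`, and `AllProvidersFailed` are hypothetical names, and real provider calls would be wrapped in small adapter functions.

```python
from typing import Callable, Sequence

# Assumption: every provider is wrapped as a callable taking a prompt and returning text.
Provider = Callable[[str], str]


class AllProvidersFailed(RuntimeError):
    """Raised when every provider in the chain has raised an error."""


def complete_with_fallback(
    prompt: str,
    providers: Sequence[tuple[str, Provider]],
) -> tuple[str, str]:
    """Try each (name, provider) pair in order; return the first success."""
    errors = []
    for name, call in providers:
        try:
            return name, call(prompt)
        except Exception as exc:  # broad on purpose: any failure triggers fallback
            errors.append(f"{name}: {exc}")
    raise AllProvidersFailed("; ".join(errors))


if __name__ == "__main__":
    def primary(prompt: str) -> str:
        raise TimeoutError("simulated outage")

    def backup(prompt: str) -> str:
        return f"summary of: {prompt}"

    print(complete_with_fallback("meeting notes", [("primary", primary), ("backup", backup)]))
```

Because the calling code only sees the callable interface, a quarterly fallback test amounts to reordering the list and rerunning the same workload.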

Mini case story: A Chaos beta customer in consulting used Gemini 2.0 Flash to parse client meeting recordings in real time. They reduced their post-meeting admin from 45 minutes to 12 minutes, allowing same-day follow-up emails with action items embedded. Client satisfaction scores increased 19% over three months.

Key takeaways

- Gemini 2.0 Flash delivers 2x speed improvements, making real-time agentic workflows more responsive

- Native multimodal support simplifies document and media processing in productivity tools

- Trade-offs include potential reasoning depth limitations and API cost considerations

- Teams should test Gemini 2.0 Flash for latency-sensitive use cases while maintaining model diversity

Summary

Google's Gemini 2.0 Flash release is more than an incremental update: it signals that production AI applications now demand sub-second responses. For Chaos users, this means context-aware reminders surface faster, document parsing happens in real time, and agentic workflows feel more natural. The key is matching the right model to the right task: Flash for speed, larger models for depth.

Next steps

- Test Gemini 2.0 Flash in your most latency-sensitive workflows and measure response time improvements

- Explore multimodal use cases: image-to-task extraction, audio transcription, whiteboard parsing

- Monitor API costs and compare per-request expenses vs. time savings

- Set up quarterly model reviews to reassess which provider and model best fits each use case

About the author

Max Beech translates AI model releases into practical implications for productivity tools. Every analysis includes real-world testing and cost considerations.

Expert review: [PLACEHOLDER: AI Research Lead, Chaos Council] · Compliance check: Completed 14 October 2025.