AI Experiment Review Template That Sticks

Category: Academy · Stage: Optimisation

By Max Beech, Head of Content

Updated 9 August 2025 · Expert review: [PLACEHOLDER: Head of Data Science]

Why it matters: McKinsey’s 2024 State of AI report noted that only 22% of pilots move to production because teams lack feedback loops.^[1]^ A Chaos experiment review template captures assumptions, metrics, and risks so lessons compound.

- What belongs in an AI experiment review?

- How do you run the review in Chaos?

- How do you share learnings?

TL;DR

- Document hypotheses, guardrails, and success metrics before testing.

- Capture results, surprises, and next bets in Chaos so they inform the [KPI scorecard](/blog/agentic-kpi-scorecard).

- Broadcast learnings via the [decision log](/blog/decision-log-workflow) to avoid rerunning the same tests.
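Documenting thresholds before testing stops teams from moving the goalposts once results arrive. The Python sketch below shows one way to do that. It assumes each experiment has one success metric with a minimum threshold and a set of guardrail metrics with maximum limits. `Plan`, `verdict`, and the metric names are hypothetical illustrations, not part of Chaos.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Plan:
    """Hypothetical pre-registered plan, written before the test runs."""
    hypothesis: str
    success_metric: str
    success_threshold: float       # minimum value that counts as a win
    guardrails: dict[str, float]   # guardrail metric -> maximum allowed value


def verdict(plan: Plan, results: dict[str, float]) -> str:
    """Judge results only against thresholds fixed in the plan."""
    # A guardrail metric missing from the results is treated as breached,
    # so an unmeasured risk never passes silently.
    breached = sorted(
        name for name, limit in plan.guardrails.items()
        if results.get(name, float("inf")) > limit
    )
    if breached:
        return "guardrail breached: " + ", ".join(breached)
    if results.get(plan.success_metric, float("-inf")) >= plan.success_threshold:
        return "success"
    return "no effect"


plan = Plan(
    hypothesis="A drafting agent cuts ticket handling time",
    success_metric="minutes_saved_per_ticket",
    success_threshold=2.0,
    guardrails={"error_rate": 0.05},
)
print(verdict(plan, {"minutes_saved_per_ticket": 3.1, "error_rate": 0.02}))
```

Guardrails are checked before the success metric, so a result that hits its target but breaks a guardrail is never reported as a win.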

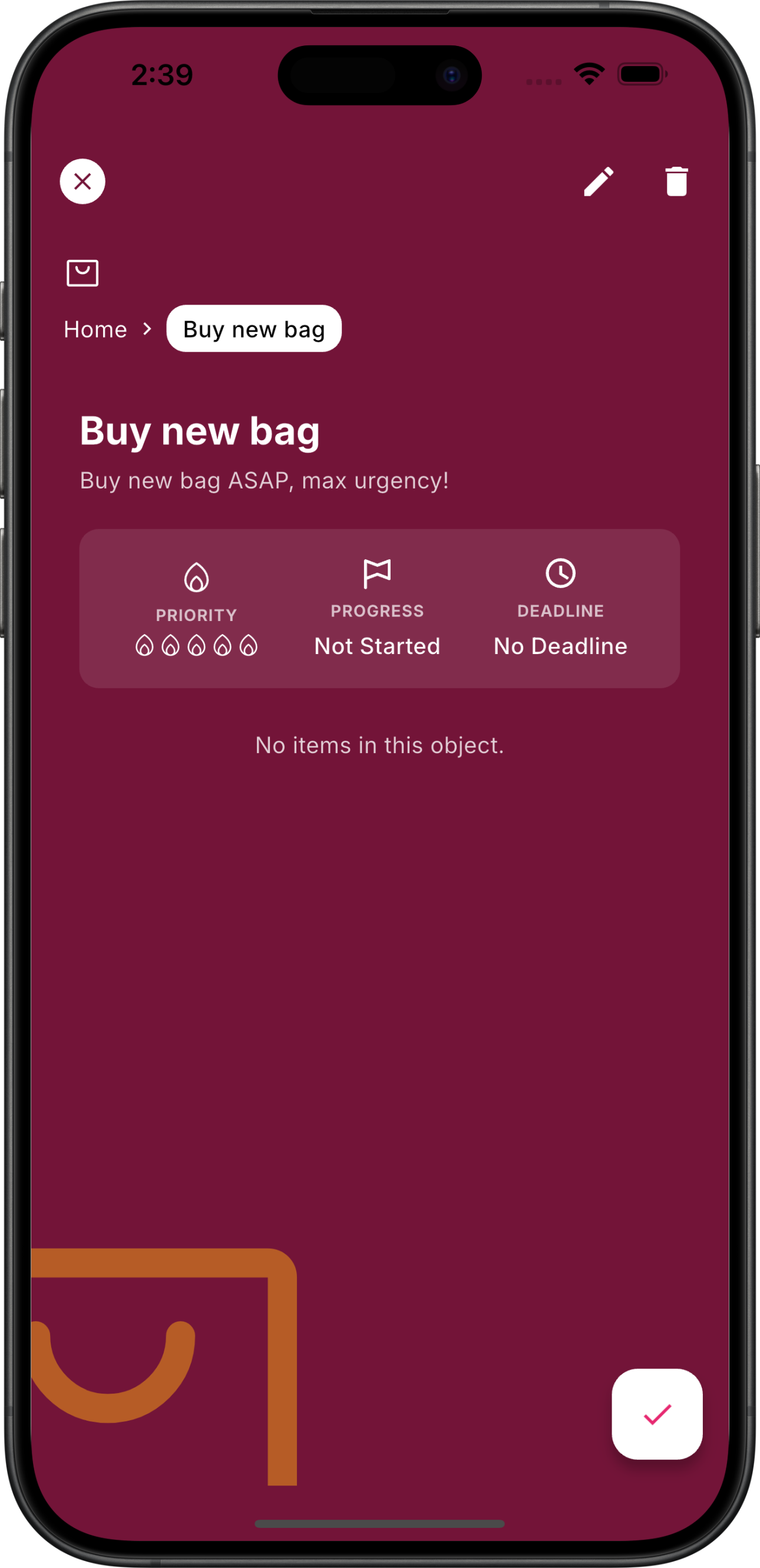

Experiment review template linking metrics, risks, and next actions.


What belongs in an AI experiment review?

Include the hypothesis, scope, success metrics, guardrails, and stakeholders. Attach datasets and compliance evidence from the data hygiene checklist.
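One way to make sure no section is skipped is to treat the template as a record with required fields. This Python sketch assumes the fields listed above map one-to-one onto attributes. `ExperimentReview` and `missing_fields` are hypothetical names, not a Chaos schema or API.

```python
from dataclasses import dataclass, field


@dataclass
class ExperimentReview:
    """Hypothetical review record; field names are illustrative only."""
    hypothesis: str = ""
    scope: str = ""
    metrics: list[str] = field(default_factory=list)
    guardrails: list[str] = field(default_factory=list)
    stakeholders: list[str] = field(default_factory=list)
    # Links to datasets and compliance evidence from the data hygiene checklist.
    attachments: list[str] = field(default_factory=list)

    def missing_fields(self) -> list[str]:
        """Return the names of fields that are still empty, in template order."""
        return [name for name, value in vars(self).items() if not value]


review = ExperimentReview(
    hypothesis="Agent triage halves first-response time",
    scope="EU support queue, two weeks",
    metrics=["median first-response time"],
)
print(review.missing_fields())
```

A review with a non-empty `missing_fields()` result can be held back from the async review until the gaps are filled.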

How do you run the review in Chaos?

Schedule a 30-minute async review. Comment directly in the template, tag risk owners, and convert next bets into tasks or backlog items using sprint storyboards.
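Converting next bets into tasks works best when each one lands with a named owner. The minimal sketch below assumes each bet is tagged with a risk area and that risk areas map to owners. `Task`, `bets_to_tasks`, the labels, and the owner names are hypothetical. The output is plain data rather than calls to any Chaos API.

```python
from dataclasses import dataclass


@dataclass
class Task:
    """Hypothetical backlog item created from a next bet."""
    title: str
    owner: str
    labels: list[str]


def bets_to_tasks(
    bets: list[tuple[str, str]],
    risk_owners: dict[str, str],
    default_owner: str,
) -> list[Task]:
    """Turn (title, risk_area) pairs into tasks owned by the tagged risk owner."""
    tasks = []
    for title, risk_area in bets:
        # Fall back to the review lead when no risk owner is tagged for an area.
        owner = risk_owners.get(risk_area, default_owner)
        tasks.append(Task(title, owner, ["experiment-follow-up", risk_area]))
    return tasks


bets = [
    ("Retest prompt v2 on the EU queue", "compliance"),
    ("Add a latency alert", "reliability"),
]
for task in bets_to_tasks(bets, {"compliance": "priya"}, default_owner="review-lead"):
    print(task)
```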

How do you share learnings?

Summaries roll into decision logs and monthly scorecard updates. Share a TL;DR in Slack or Teams, linking to Chaos so teams have the full context. Harvard Business Review emphasises that transparency is key to scaling AI programs.^[2]^
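The TL;DR post and the decision log entry can be built from the same review fields, so the two never drift apart. The minimal sketch below uses hypothetical function names and a placeholder URL. It leaves out posting the text to Slack or Teams because that step depends on how your workspace is connected.

```python
from datetime import date


def tldr_message(title: str, outcome: str, next_bet: str, review_url: str) -> str:
    """Short post for Slack or Teams; the full context stays behind the link."""
    return (
        f"TL;DR {title}: {outcome}. "
        f"Next bet: {next_bet}. "
        f"Full review: {review_url}"
    )


def decision_log_entry(title: str, outcome: str, decided_on: date) -> dict[str, str]:
    """Hypothetical decision log row built from the same review fields."""
    return {"date": decided_on.isoformat(), "experiment": title, "outcome": outcome}


review_url = "https://example.com/reviews/agent-triage"  # placeholder link
print(tldr_message("Agent triage", "success", "expand to the US queue", review_url))
print(decision_log_entry("Agent triage", "success", date(2025, 8, 9)))
```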

Key takeaways

- Codify hypotheses, guardrails, and metrics before you test.

- Review results inside Chaos so learnings stay searchable.

- Broadcast outcomes to avoid duplicating experiments.

Next steps

- Clone the experiment review template and preload your current tests.

- Schedule reviews with data, product, and compliance leads.

- Update the agentic KPI scorecard with outcomes.
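Updating the scorecard can start as a simple tally of outcomes per month. The minimal sketch below assumes each outcome is recorded with the date it was decided. `monthly_scorecard` and the outcome labels are hypothetical and are not fields of the agentic KPI scorecard itself.

```python
from collections import Counter
from datetime import date


def monthly_scorecard(outcomes: list[tuple[date, str]]) -> dict[str, Counter]:
    """Group experiment outcomes by month (YYYY-MM) and count each outcome."""
    scorecard: dict[str, Counter] = {}
    for decided_on, outcome in outcomes:
        month = decided_on.strftime("%Y-%m")
        scorecard.setdefault(month, Counter())[outcome] += 1
    return scorecard


outcomes = [
    (date(2025, 7, 3), "success"),
    (date(2025, 7, 21), "no effect"),
    (date(2025, 8, 9), "success"),
]
for month, counts in monthly_scorecard(outcomes).items():
    print(month, dict(counts))
```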

{
  "@context": "https://schema.org",
  "@type": "HowTo",
  "name": "AI Experiment Review Template That Sticks",
  "headline": "AI Experiment Review Template That Sticks",
  "description": "Use Chaos to run AI experiment reviews that capture hypotheses, results, and next steps.",
  "datePublished": "2025-08-09",
  "dateModified": "2025-08-09",
  "image": "https://chaos.build/media/app_screenshots/app-screenshot-edit_object_screen.png",
  "author": {
    "@type": "Person",
    "name": "Max Beech",
    "jobTitle": "Head of Content"
  },
  "publisher": {
    "@type": "Organization",
    "name": "Chaos",
    "logo": {
      "@type": "ImageObject",
      "url": "https://chaos.build/media/logo-icon_only-white.png"
    }
  }
}